Realistic Synthetic Dataset

My company was interested in building a synthetic dataset of basic human movement, so I started looking into it. Using what I had learned from Cesium, I began adding animated 3D models of people. I found resource packs on the Epic store with photoscanned people and walking animations, stitched them together with the Cesium environment, and got a result that looked pretty real.

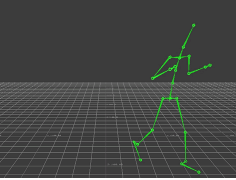

This evolved from a tiny proof of concept into actual work that I was tasked with for a few months. Although I can’t show the final result, I will describe the improvements I made. Firstly, I didn’t stick with Cesium or the photoscanned character models. I found 3 realistic-looking maps on the Epic store, and I went with Mixamo for everything else.

Mixamo is owned by Adobe, and it is a wonderful website for finding free characters and animations. The best part about their animations is that they are all made with motion capture, so it was nice to know that each character’s movement would be rooted in reality. Most importantly, any character you find on Mixamo can be used with any animation you find on Mixamo.

If you don’t know, the skeletal mesh of a character must have the same skeleton that the animation was made with. One of the most painful processes to go through is retargeting a skeleton so that a mismatched mesh and animation fit together. I went through this myself when I tried to use motion capture data from a research study of people walking. The image of the green skeleton came from a university dataset. This skeleton differs from the Mixamo skeleton in the hips. I tried my best but was unable to retarget it. I even downloaded Autodesk MotionBuilder, but that proved too difficult.
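To make the skeleton mismatch concrete, here is a minimal sketch of how two bone hierarchies could be compared before attempting a retarget. It is only an illustration: the function `skeleton_mismatches` and both example skeletons (including every bone name) are hypothetical stand-ins, not the real Mixamo or university-dataset rigs, and this is not how Unreal Engine checks compatibility internally.

```python
def skeleton_mismatches(mesh_bones, anim_bones):
    """Compare two skeletons given as {bone_name: parent_name} dicts.

    The root bone has parent None. Returns the bones the animation
    expects but the mesh lacks, the bones only the mesh has, and the
    shared bones that hang off a different parent.
    """
    mesh_names, anim_names = set(mesh_bones), set(anim_bones)
    shared = mesh_names & anim_names
    return {
        "missing": sorted(anim_names - mesh_names),
        "extra": sorted(mesh_names - anim_names),
        "reparented": sorted(
            b for b in shared if mesh_bones[b] != anim_bones[b]
        ),
    }


# Hypothetical character rig: the legs attach directly to the hips.
character_rig = {
    "Hips": None,
    "Spine": "Hips",
    "LeftUpLeg": "Hips",
    "RightUpLeg": "Hips",
}

# Hypothetical mocap rig: extra joints sit between the hips and the limbs.
mocap_rig = {
    "Hips": None,
    "LowerBack": "Hips",
    "Spine": "LowerBack",
    "LHipJoint": "Hips",
    "LeftUpLeg": "LHipJoint",
    "RHipJoint": "Hips",
    "RightUpLeg": "RHipJoint",
}

report = skeleton_mismatches(character_rig, mocap_rig)
for kind, bones in report.items():
    print(f"{kind}: {bones}")
```

Any non-empty list in the report means the animation cannot simply be played on the character mesh; those bones are the ones a retargeting step would have to map by hand.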

Knowing that I was going to use Mixamo, I started organizing all my character models and animations, and then I started building Blueprints in Unreal Engine. For those who don’t know, Blueprints is Unreal Engine’s visual scripting tool: instead of programming in C++, developers write logic as a graph of connected nodes. These Blueprints controlled character spawning and navigation. Multiple cameras were set up to record each event, and the recordings were saved locally to my machine. Lastly, there was no need for human annotations (like bounding boxes for object detection), because each scene was recorded a second time. During the second recording, all characters are shaded entirely in one color. That color can then be isolated in each frame, and the bounding box corners can be read from the extent of the matching pixels.
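The color-isolation step can be sketched in a few lines of NumPy. This is an illustration rather than the code I actually used: the function name `bbox_from_color`, the magenta mask color, and the tolerance value are all assumptions, and the frame here is generated in memory instead of being read from a recording.

```python
import numpy as np


def bbox_from_color(frame, color, tol=10):
    """Return (x_min, y_min, x_max, y_max) of the pixels matching `color`.

    `frame` is an H x W x 3 uint8 array. A pixel matches when every
    channel is within `tol` of the target, which leaves some slack for
    compression noise. Returns None when no pixel matches.
    """
    target = np.asarray(color, dtype=np.int16)
    diff = np.abs(frame.astype(np.int16) - target)
    mask = np.all(diff <= tol, axis=-1)
    if not mask.any():
        return None
    ys, xs = np.nonzero(mask)
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Stand-in for one frame of the second, flat-shaded recording:
# a black background with one magenta "character" silhouette.
MASK_COLOR = (255, 0, 255)  # assumed mask color
frame = np.zeros((120, 160, 3), dtype=np.uint8)
frame[30:90, 50:80] = MASK_COLOR

box = bbox_from_color(frame, MASK_COLOR)
print(box)
```

Note that this returns a single box around every matching pixel. If several characters share the same color in one frame, the mask would first need to be split into separate regions (for example with a connected-components pass), or each character would need its own color.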