My Intro to Game Engines

I’ve been a little all over the place when it comes to video game engines. My approach has generally been from a computer vision perspective. Here I talk about some of the first ideas I had for game engines. In the next couple of posts, you will see that I start to focus specifically on building synthetic datasets, creating digital twins, and visualizing LiDAR data.

Point Cloud Visualization & Cesium

I was already familiar with Unity and Unreal just from playing a bunch of video games. Once I started working on my LiDAR project at work, I picked up an Oculus headset and looked into VR development. I thought there could be some business applications for viewing our 3D-scanned spaces in VR. I can’t share any of the videos from it, but the results were OK.

At the time (and I think still), there wasn’t a way to natively use point clouds in Unity. I found a plugin someone had built and shared on the Unity Asset Store, and with it I was able to visualize the point cloud. I also started to become familiar with the APIs available for communicating with VR headsets. At the time I did that project, I believe Unity was using OpenVR, and now most game engines use OpenXR.

I was developing this on my work laptop, and I started to become aware of the limitations of running games standalone on the headset versus linked to a PC. Since my work laptop wasn’t very powerful, I couldn’t hold millions of points in memory and had to try culling points. In the end, I think the best approach was creating a spherical “fog” around the player, where points beyond a certain distance started to disappear.
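The distance-based culling described above can be sketched roughly as follows. This is a minimal illustration in Python, not the Unity code from the project. The function name `cull_points`, the two radii, and the random thinning inside the fade band are all assumptions made for the example.

```python
import math
import random

def cull_points(points, player, fade_start, fade_end, rng=random.random):
    """Return the points that stay visible around the player.

    Hypothetical helper: points within fade_start are always kept,
    points at or beyond fade_end are always dropped, and points in
    between are kept with a probability that falls off linearly,
    which gives the "fog" effect.
    """
    kept = []
    for p in points:
        d = math.dist(p, player)  # Euclidean distance to the player
        if d <= fade_start:
            kept.append(p)
        elif d < fade_end:
            keep_prob = (fade_end - d) / (fade_end - fade_start)
            if rng() < keep_prob:
                kept.append(p)
    return kept
```

In a real engine this kind of test would more likely run per chunk or octree node than per point, since looping over millions of points every frame is exactly the cost the culling is trying to avoid.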

Being one of the employees showing interest in video game engines, I was asked to look into Cesium. It was my first time hearing of them, but I learned that they were well known for pretty much having the whole globe mapped out in 3D tiles. They had recently created an Unreal Engine plugin, so I went through their tutorial. It was pretty incredible to see the whole globe in the Unreal editor, and I could give any latitude and longitude and teleport there. Cesium also has data that can be streamed in, like Google-style satellite imagery (I think provided by Bing) and OpenStreetMap (OSM) building data. The most interesting part was seeing how data you upload to Cesium’s cloud can be streamed into Unreal. For the tutorial, they had multiple city blocks of Denver, Colorado that could be streamed. I didn’t take much footage, but here is an image of me in third person looking around the map.

The idea of using real-life scenes sparked an interest in data synthesis. Unreal Engine doesn’t have to be used like a video game. I can set up multiple cameras to film around an environment, have an event take place, and have those videos saved as MP4s to pass into a model for training.

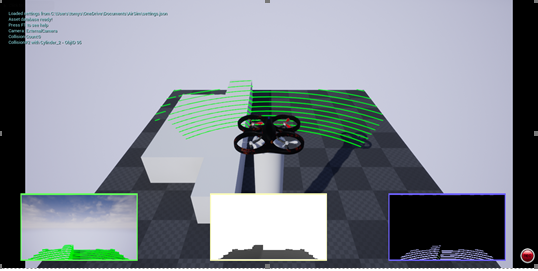
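Teleporting to a latitude and longitude depends on converting geodetic coordinates into a Cartesian frame. Globe-scale tools like Cesium use the Earth-centered, Earth-fixed (ECEF) frame for this, as far as I understand. The sketch below shows the standard WGS84 conversion in Python. It is not Cesium’s code (the plugin handles this internally), and the function name `geodetic_to_ecef` is made up for illustration.

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0            # semi-major axis in meters
WGS84_F = 1 / 298.257223563    # flattening

def geodetic_to_ecef(lat_deg, lon_deg, height_m=0.0):
    """Convert latitude/longitude/height to ECEF x, y, z in meters."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    e2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1 - e2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - e2) + height_m) * math.sin(lat)
    return x, y, z
```

For example, `geodetic_to_ecef(39.7392, -104.9903, 1609.0)` gives an approximate ECEF position for downtown Denver (the coordinates and elevation here are rounded values, not taken from the tutorial).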

Microsoft AirSim

I spent a little time looking into Microsoft’s AirSim plugin for Unreal Engine. As I understood it, the main function of AirSim is to let users practice flying drones. Drone controllers can be plugged into a PC and used to operate a drone in an Unreal Engine game. AirSim also has a car that has most of the same functions as the drone. What interested me the most was that a simulated LiDAR sensor is available on both vehicles. I started to explore whether I could remove the sensor from the vehicle and place it anywhere in the map. You can even control things like range, number of channels, and points per second on these sensors.
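As a rough illustration of what configuring the simulated LiDAR looks like, the sketch below builds a settings dictionary in Python and serializes it to JSON, which is the format AirSim reads from its `settings.json` file. The key names are written from memory of the AirSim documentation and should be checked against the version in use. The vehicle name `ScanRig`, the sensor name `Lidar1`, the helper `make_lidar_settings`, and all numeric values are assumptions for the example.

```python
import json

def make_lidar_settings(points_per_second=100000, channels=16, range_m=100.0):
    """Build an AirSim-style settings dict with one LiDAR on a car.

    Hypothetical helper; key names are recalled from the AirSim docs
    and are not guaranteed to match every release.
    """
    lidar = {
        "SensorType": 6,            # 6 is the LiDAR sensor type, as I recall
        "Enabled": True,
        "NumberOfChannels": channels,
        "Range": range_m,           # meters
        "PointsPerSecond": points_per_second,
        "RotationsPerSecond": 10,
        # Pose offset of the sensor, relative to the vehicle
        "X": 0, "Y": 0, "Z": -1,
        "Roll": 0, "Pitch": 0, "Yaw": 0,
        "DrawDebugPoints": False,
    }
    return {
        "SettingsVersion": 1.2,
        "SimMode": "Car",
        "Vehicles": {
            "ScanRig": {
                "VehicleType": "PhysXCar",
                "Sensors": {"Lidar1": lidar},
            }
        },
    }

# JSON text that could be saved as settings.json
settings_json = json.dumps(make_lidar_settings(), indent=2)
```

Note that the pose offset moves the sensor relative to its vehicle, so placing a LiDAR at an arbitrary spot in the map would still mean parking a vehicle there or finding another workaround.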

I thought about making a synthetic dataset similar to the buildings we scan at work. COVID was still very prevalent at the time, and I was wondering whether we had enough data for certain algorithms. It would have been interesting to compare the results from a synthetic point cloud with the real thing.

I didn’t get as far as I wanted with a proof of concept, since even getting Microsoft AirSim to run was difficult. I had to use Windows 11 developer releases just to get it to work.